Knowledge graphs unleash the power of enterprise data at scale by allowing organizations to use all the information at their disposal – internal or external, regardless of format, historical or real time, big or small – to fuel pervasive analytics, drive digital transformation initiatives, and build strategic competitive advantage.

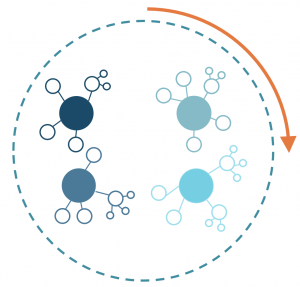

Anzo guides you through a simple five-step process to transform siloed data from enterprise sources into a scalable knowledge graph.

Anzo’s built-in data pipelines use parallel processing to load metadata and data from any structured or semi-structured source, such as RDBMS, CSV, XML, and JSON, into graph data models. Natural language processing (NLP) finds and extracts data from unstructured content such as documents, emails, presentations, and web pages. Anzo’s knowledge graph catalog uses metadata-driven, parallel graph ETL and ELT to load and transform data from dozens to thousands of siloed enterprise sources into graph data models, the building blocks of the knowledge graph.
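As a rough illustration of what loading tabular data "into graph data models" means, the sketch below lifts the rows of CSV sources into subject-predicate-object triples in N-Triples syntax, one subject per row and one triple per non-empty cell. It is not Anzo’s pipeline: the `http://example.org/` namespace, the `lift_csv` and `lift_all` helpers, and the convention of using an `id` column as the row key are all hypothetical. Each source is lifted by a separate worker to echo the parallel loading described above; threads are only a stand-in here, since a real engine would spread the work across processes or cluster nodes.

```python
import csv
import io
from concurrent.futures import ThreadPoolExecutor
from urllib.parse import quote

BASE = "http://example.org/"  # hypothetical namespace, not an Anzo default
RDF_TYPE = "<http://www.w3.org/1999/02/22-rdf-syntax-ns#type>"


def escape_literal(value: str) -> str:
    """Escape a string for use as an N-Triples literal."""
    return (value.replace("\\", "\\\\").replace('"', '\\"')
                 .replace("\n", "\\n").replace("\r", "\\r"))


def lift_csv(source: str, text: str, key: str = "id") -> list[str]:
    """Lift one CSV source: one subject IRI per row, one triple per non-empty cell."""
    triples = []
    for row in csv.DictReader(io.StringIO(text)):
        subject = f"<{BASE}{source}/{quote(row[key])}>"
        # Type each row with a class named after its source (hypothetical convention)
        triples.append(f"{subject} {RDF_TYPE} <{BASE}{source}> .")
        for column, value in row.items():
            if column == key or not value:
                continue  # the key already names the subject; skip empty cells
            predicate = f"<{BASE}{source}#{quote(column)}>"
            triples.append(f'{subject} {predicate} "{escape_literal(value)}" .')
    return triples


def lift_all(sources: dict[str, str]) -> list[str]:
    """Lift several sources concurrently (threads stand in for parallel workers)."""
    with ThreadPoolExecutor() as pool:
        batches = pool.map(lambda item: lift_csv(*item), sources.items())
        return [t for batch in batches for t in batch]


customers = "id,name,country\nc1,Acme,US\nc2,Globex,\n"
orders = "id,customer,total\no1,c1,120.50\n"
triples = lift_all({"crm": customers, "erp": orders})
print("\n".join(triples))
```

Note that nothing in the sketch requires a schema to be agreed in advance: every column simply becomes a predicate, which is what later lets the data be aligned to a shared model.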

Step 1: Build a catalog of knowledge graphs from existing data sources

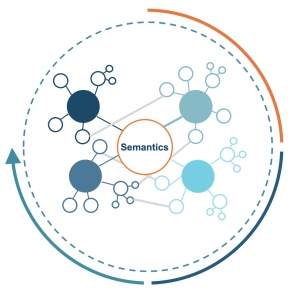

Unlike traditional data models, which require a single, universally agreed-upon schema, semantic models flexibly capture the business meaning of data using the everyday terms and relationships that users already understand, so users can identify the data they want for business-oriented analytics. Anzo supports OWL (the Web Ontology Language), an open W3C standard for building semantic models.
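To make "everyday terms and relationships" concrete, the sketch below writes a tiny OWL ontology in Turtle syntax: two classes carrying business-friendly labels and two properties that relate them. The `ex:` namespace, the Customer/Order vocabulary, and the `Prop`/`to_turtle` helpers are hypothetical examples; generating Turtle from Python is just one convenient way to show what an OWL model contains, not how Anzo builds its models.

```python
from dataclasses import dataclass

PREFIXES = """@prefix owl: <http://www.w3.org/2002/07/owl#> .
@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .
@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .
@prefix ex: <http://example.org/model#> ."""


@dataclass
class Prop:
    name: str    # local name in the model, e.g. "placedBy"
    label: str   # business-friendly label users see, e.g. "placed by"
    domain: str  # class the property describes
    target: str  # another class, or an XSD datatype such as "xsd:decimal"


def to_turtle(classes: dict[str, str], props: list[Prop]) -> str:
    """Emit OWL class and property declarations as Turtle text."""
    lines = [PREFIXES, ""]
    for name, label in classes.items():
        lines.append(f'ex:{name} a owl:Class ; rdfs:label "{label}" .')
    for p in props:
        # Properties pointing at literals are datatype properties; at classes, object properties
        literal = p.target.startswith("xsd:")
        kind = "owl:DatatypeProperty" if literal else "owl:ObjectProperty"
        rng = p.target if literal else f"ex:{p.target}"
        lines.append(f'ex:{p.name} a {kind} ; rdfs:label "{p.label}" ; '
                     f"rdfs:domain ex:{p.domain} ; rdfs:range {rng} .")
    return "\n".join(lines)


model = to_turtle(
    {"Customer": "Customer", "Order": "Sales Order"},
    [Prop("placedBy", "placed by", "Order", "Customer"),
     Prop("orderTotal", "order total", "Order", "xsd:decimal")],
)
print(model)
```

The `rdfs:label` values are where the business vocabulary lives; renaming a label changes what users see without touching the underlying identifiers or the data.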

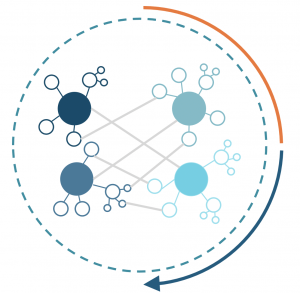

Step 2: Align the knowledge graphs to semantic models (ontologies) based on business meaning

Auto-generate semantic models from data sources, then connect and blend multiple knowledge graphs using business-level semantic models. Anzo can combine and align any cataloged data with its business models and apply data cleansing and transformation steps to harmonize data consistently at enterprise scale. Unlike traditional data tools, which must generate multiple new copies of data to execute rigid ETL/ELT processes with manual SQL and other coding, Anzo models and manipulates large collections of data in memory in seconds. This breakthrough performance is made possible by AnzoGraph, Anzo’s embedded graph database, whose in-memory massively parallel processing (MPP) capability rapidly loads data into memory and performs multiple data cleansing and blending activities in seconds.
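The align-and-blend step can be pictured as rewriting each source’s own predicates onto shared business-model terms, applying cleansing rules on the way in, and merging the results. In the minimal sketch below, the `ALIGNMENT` and `SAME_AS` tables, the `clean` rules, and all identifiers are hypothetical; it runs on plain Python tuples in memory rather than in AnzoGraph and only illustrates the idea, not Anzo’s implementation.

```python
Triple = tuple[str, str, str]

# Hypothetical mappings from each source's own predicates to shared model terms
ALIGNMENT = {
    "crm#name": "model#customerName",
    "erp#cust_nm": "model#customerName",
    "crm#country": "model#country",
    "erp#ctry": "model#country",
}

# Hypothetical identity links: the ERP uses its own IDs for the same customers
SAME_AS = {"erp/C-001": "crm/c1"}


def clean(predicate: str, value: str) -> str:
    """Example cleansing rules: trim whitespace, upper-case country codes."""
    value = value.strip()
    if predicate == "model#country":
        value = value.upper()
    return value


def blend(*graphs: list[Triple]) -> set[Triple]:
    """Rewrite every source triple onto the shared model and merge the results."""
    merged: set[Triple] = set()
    for graph in graphs:
        for s, p, o in graph:
            target = ALIGNMENT.get(p)
            if target is None:
                continue  # predicates not covered by the model are left out of this view
            merged.add((SAME_AS.get(s, s), target, clean(target, o)))
    return merged


crm = [("crm/c1", "crm#name", "Acme"), ("crm/c1", "crm#country", "us")]
erp = [("erp/C-001", "erp#cust_nm", " Acme "), ("erp/C-001", "erp#ctry", "US")]
blended = blend(crm, erp)
print(sorted(blended))
```

Because the merged result is a set, the four source triples collapse into two harmonized facts about one customer; the source data itself is never copied into a new rigid schema, only reinterpreted through the mapping.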

Step 3: Lift data sources into automatically derived knowledge graphs with in-memory transformation

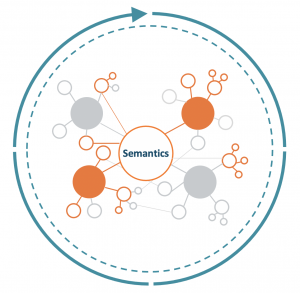

Apply traditional OLAP and graph-specific analytic functions, as well as data science, ML, and geospatial functions, to support a broad range of search, analytics, and operational use cases at scale. Anzo lets users easily “pivot” their data exploration and ask entirely new questions without having to re-engineer a rigid data schema to accommodate unanticipated lines of inquiry. Users can query data in Anzo in its native graph format, or access the knowledge graph from external tools such as R, JDBC-based SQL tools, Tableau, and TIBCO Spotfire through OData or REST endpoints.

With Anzo’s agile, iterative approach, you can start small and grow over time, reducing risk and delivering ROI to the business sooner. Implement your first knowledge graph in a few days by focusing on a specific use case and set of data sources. Then expand the solution over time, adding new subject-matter domains along with their sources, user groups, and use cases.
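The “pivot” described above works because a graph query is a pattern of triples containing variables, so a new question needs a new pattern rather than a new schema. The minimal matcher below evaluates such patterns (a basic graph pattern join) over an in-memory list of triples. It is a simplified stand-in for a real graph query language such as SPARQL, not Anzo’s query engine, and the data, the `match` function, and the `?`-prefixed variable convention are hypothetical.

```python
Triple = tuple[str, str, str]


def is_var(term: str) -> bool:
    return term.startswith("?")


def match(patterns: list[Triple], triples: list[Triple]) -> list[dict[str, str]]:
    """Return every variable binding that satisfies all triple patterns."""
    solutions: list[dict[str, str]] = [{}]
    for pattern in patterns:
        next_solutions = []
        for binding in solutions:
            for triple in triples:
                candidate = dict(binding)
                ok = True
                for term, value in zip(pattern, triple):
                    if is_var(term):
                        # A variable must agree with any value it was already bound to
                        if candidate.setdefault(term, value) != value:
                            ok = False
                            break
                    elif term != value:
                        ok = False
                        break
                if ok:
                    next_solutions.append(candidate)
        solutions = next_solutions
    return solutions


data = [
    ("o1", "placedBy", "c1"), ("o2", "placedBy", "c2"),
    ("c1", "country", "US"), ("c2", "country", "DE"),
    ("o1", "total", "120"), ("o2", "total", "80"),
]

# Original question: which orders were placed by US customers?
q1 = match([("?order", "placedBy", "?c"), ("?c", "country", "US")], data)

# Pivot: order totals by customer country, using the same data and no schema change
q2 = match([("?order", "placedBy", "?c"), ("?c", "country", "?country"),
            ("?order", "total", "?total")], data)
print(q1)
print(q2)
```

The second query only adds and relaxes patterns; the stored triples are untouched, which is the property that makes unanticipated lines of inquiry cheap in a graph model.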

Step 4: Access and analyze data in the knowledge graph

Step 5: Expand your knowledge graphs by selectively repeating earlier steps to add more domains, data, users, and use cases